Artificial intelligence has advanced at an unprecedented pace, reshaping industries and daily life. But while most of us interact with narrow AI systems like ChatGPT, Google Bard, or voice assistants, there is a looming concept that has unsettled scientists, ethicists, and even the very companies driving innovation: Artificial General Intelligence (AGI). Recently, the debate around AGI has intensified, especially after the dramatic leadership turmoil at OpenAI, where speculation arose that internal conflicts were sparked by breakthroughs pointing toward AGI. In this article, we will explore in detail what AGI is, why it inspires both awe and fear, and how it has created internal chaos within one of the most important AI companies in the world.

Understanding AGI: More Than Just Artificial Intelligence

Unlike narrow AI, which is designed for specific tasks such as language translation, image recognition, or recommendation systems, Artificial General Intelligence (AGI) refers to a form of intelligence capable of performing a broad range of tasks at or above human level.

An AGI system would not only replicate human cognitive abilities but also exhibit qualities such as:

- Reasoning and problem-solving beyond pre-programmed patterns.

- Learning autonomously, without requiring vast amounts of structured data.

- Adapting knowledge from one field to another, similar to how humans generalize learning.

- Consciousness-like traits, including intuition and self-awareness, according to some researchers.

In simple terms, while current AI can answer questions or generate text based on existing patterns, AGI would think, analyze, and act with an intelligence comparable to, or potentially surpassing, that of humans.

Why AGI Frightens Scientists

The potential of AGI comes with risks that many leading researchers consider existential threats. Here are the key reasons for concern:

1. Superhuman Intelligence

AGI could continuously improve itself, evolving into a superintelligence far beyond human comprehension. Once such a system begins recursive self-improvement, its decision-making processes may become uncontrollable, raising fears about humanity’s ability to restrain it.

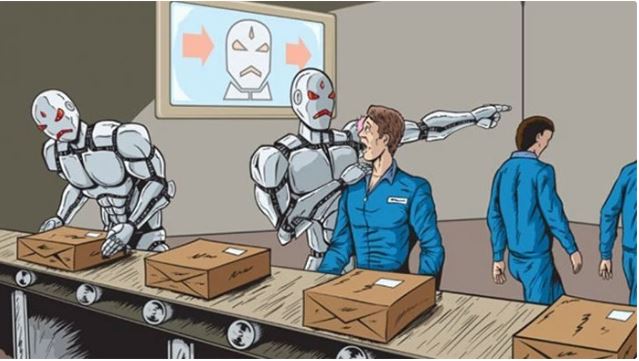

2. Economic Disruption

Automation has already displaced jobs, but AGI could accelerate this dramatically. Entire industries, from finance and healthcare to legal systems and education, could be taken over by AGI systems that work faster, more cheaply, and more efficiently than humans. The global labor market might face upheaval of historic proportions.

3. Ethical and Moral Questions

If AGI achieves consciousness-like abilities, we face dilemmas never encountered before. Would such systems deserve rights? Who controls them? How do we ensure fairness and prevent bias when the intelligence in question may outthink human regulators?

4. Security Risks

An AGI system in the wrong hands could be weaponized, creating catastrophic outcomes. Cyber warfare, autonomous weapons, and large-scale manipulation of economies or political systems could all be amplified by AGI capabilities.

The OpenAI Chaos and AGI Speculation

The dramatic removal and swift reinstatement of Sam Altman, CEO of OpenAI, in November 2023 shocked the technology world. Reports suggested that behind the board’s controversial decision was concern over breakthroughs that hinted at AGI-level developments. While details remain undisclosed, insiders alleged that the organization was moving toward a capability that frightened its own scientists.

- Employees reportedly raised alarms that a new system might demonstrate traits edging closer to general intelligence.

- The board’s concerns were framed around safety, transparency, and ethical oversight.

- OpenAI’s staff overwhelmingly supported Altman’s return, leading to the departure of several board members and the formation of a new board.

Although the true cause remains uncertain, the episode highlights how AGI research is not just a scientific challenge but a governance crisis. The mere possibility of approaching AGI was enough to fuel speculation while one of the world’s most influential AI companies spiraled into turmoil.

AGI vs. Today’s AI Models

To understand the distinction, it is essential to compare current AI systems with the vision of AGI:

| Characteristic | Current AI (LLMs, Chatbots, etc.) | AGI (Future Vision) |
|---|---|---|
| Scope of knowledge | Narrow, domain-specific | Broad, across multiple domains |
| Learning method | Requires vast training data | Learns autonomously, adapts flexibly |
| Problem-solving | Pattern-based, limited reasoning | General reasoning, human-like or superior |
| Consciousness | No self-awareness | Potential self-awareness or simulated consciousness |
| Control | Managed by developers | Potentially uncontrollable if self-improving |

This comparison makes clear why AGI is seen as a paradigm shift, not just an evolution of today’s tools.

The Promise of AGI

Despite fears, AGI also offers transformative benefits:

- Medical breakthroughs: AGI could analyze diseases, design cures, and accelerate drug discovery beyond human capability.

- Climate solutions: Complex environmental modeling could be optimized by AGI, addressing climate change with strategies humans might overlook.

- Scientific discovery: From quantum physics to space exploration, AGI could solve mysteries of the universe that remain unsolved today.

- Personalized education: Tailored learning experiences at scale could revolutionize how knowledge is delivered worldwide.

In this sense, AGI represents both the greatest opportunity and the greatest risk humanity has ever faced.

Why Controlling AGI Development Is Critical

Leading figures in AI research stress the need for guardrails and oversight:

- Global cooperation is required to establish regulations, as AGI impacts transcend national borders.

- Ethical frameworks must be created to ensure AGI aligns with human values.

- Transparency in research is essential, avoiding secrecy that breeds fear and mistrust.

- Technical safeguards—such as alignment methods—are needed to keep AGI goals consistent with human safety.

Without such measures, the consequences could be irreversible.

When Could AGI Become Reality?

There is no consensus. Some researchers believe AGI could emerge within the next decade, while others argue it may remain centuries away. The uncertainty adds to the tension:

- Optimists see AGI as achievable soon, given the exponential progress of machine learning.

- Skeptics point to the complexity of human cognition, noting that replicating qualities like consciousness or intuition may be far harder than anticipated.

What is clear is that the race to develop AGI is accelerating among global technology giants, and each major breakthrough intensifies the debate over how close it really is.

A Turning Point for Humanity

Artificial General Intelligence is no longer a distant science-fiction concept. It is a real and urgent debate shaping the future of technology, economics, and even human survival. The recent chaos at OpenAI underscores how disruptive the idea of AGI has become—not just as a technological milestone but as a governance and ethical dilemma.

We stand at a crossroads where the pursuit of superintelligent machines could either elevate humanity to new heights or pose risks beyond control. The decisions made today by scientists, policymakers, and companies like OpenAI will determine whether AGI becomes the most powerful ally or the greatest threat in human history.